You Might Also Think This Is Unfair

I gave this talk, “You Might Also Think This Is Unfair: Operationalizing Fairness and Respect in Information Systems”, on March 24, 2022 for the University of Michigan School of Information.

Abstract

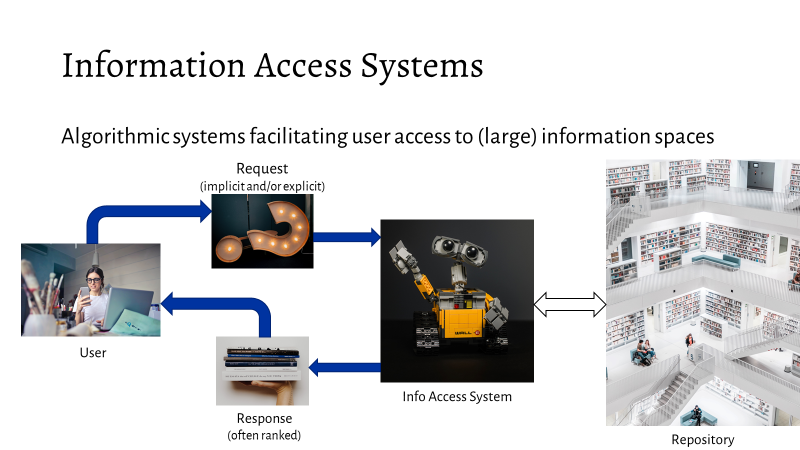

Every day, information access systems mediate our experience of the world beyond our immediate senses. Google and Bing help us find what we seek, Amazon and Netflix recommend things for us to buy and watch, Apple News gives us the day’s events, and LinkedIn helps us find new jobs. These systems deliver immense value, but they also have a profound influence on how we experience information and on the resources and perspectives we see. The influence and impacts of these systems raise a number of questions: how are the costs and benefits of search, recommendation, and other information access systems distributed? Is that distribution equitable, or does it benefit a few at the expense of many? Are these systems designed with respect for their users, producers, and other affected people?

In this talk, I will discuss how to locate specific questions about the equity of an information access system in a landscape of harms, present some of my own group’s work on quantifying and measuring systematic biases, and look to a future of engaged, human-centered research and development on information access systems grounded in the dignity and well-being of everyone they affect.

My Research

These papers provide more details on the research I presented. Many of them have accompanying code to reproduce the experiments and results.

, , , and . 2022. Fairness in Information Access Systems. Foundations and Trends® in Information Retrieval 16(1–2) (July 2022), 1–177. DOI 10.1561/1500000079. arXiv:2105.05779 [cs.IR]. NSF PAR 10347630. Impact factor: 8. Cited 271 times. Cited 108 times.

, , , , , , and . 2018. All The Cool Kids, How Do They Fit In?: Popularity and Demographic Biases in Recommender Evaluation and Effectiveness. In Proceedings of the 1st Conference on Fairness, Accountability and Transparency (FAT* 2018), Feb 23, 2018. PMLR, Proceedings of Machine Learning Research 81:172–186. proceedings.mlr.press/v81/ekstrand18b.html. Acceptance rate: 24%. Cited 377 times. Cited 229 times.

and . 2021. Exploring Author Gender in Book Rating and Recommendation. User Modeling and User-Adapted Interaction 31(3) (February 2021), 377–420. DOI 10.1007/s11257-020-09284-2. arXiv:1808.07586v2. NSF PAR 10218853. Impact factor: 4.412. Cited 228 times (shared with RecSys18). Cited 117 times (shared with RecSys18).

, , , , and . 2018. Exploring Author Gender in Book Rating and Recommendation. In Proceedings of the 12th ACM Conference on Recommender Systems (RecSys ’18), Oct 3, 2018. ACM, pp. 242–250. DOI 10.1145/3240323.3240373. arXiv:1808.07586v1 [cs.IR]. Acceptance rate: 17.5%. Citations reported under UMUAI21. Cited 117 times.

, , , and . 2020. Comparing Fair Ranking Metrics. Presented at the 3rd FAccTrec Workshop on Responsible Recommendation at RecSys 2020 (peer-reviewed but not archived). arXiv:2009.01311 [cs.IR]. Cited 46 times. Cited 30 times.

, , , , and . 2020. Evaluating Stochastic Rankings with Expected Exposure. In Proceedings of the 29th ACM International Conference on Information and Knowledge Management (CIKM ’20), Oct 21, 2020. ACM, pp. 275–284. DOI 10.1145/3340531.3411962. arXiv:2004.13157 [cs.IR]. NSF PAR 10199451. Acceptance rate: 20%. Nominated for Best Long Paper. Cited 232 times. Cited 181 times.

, , and . 2021. Pink for Princesses, Blue for Superheroes: The Need to Examine Gender Stereotypes in Kids’ Products in Search and Recommendations. In Proceedings of the 5th International and Interdisciplinary Workshop on Children & Recommender Systems (KidRec ’21), at IDC 2021, Jun 27, 2021. arXiv:2105.09296. NSF PAR 10335669. Cited 13 times. Cited 6 times.

, , and . 2021. Evaluating Recommenders with Distributions. In Proceedings of the RecSys 2021 Workshop on Perspectives on the Evaluation of Recommender Systems (RecSys ’21), Sep 25, 2021. Cited 2 times.

, , , , , and . 2021. Estimation of Fair Ranking Metrics with Incomplete Judgments. In Proceedings of The Web Conference 2021 (TheWebConf 2021), Apr 19, 2021. ACM, pp. 1065–1075. DOI 10.1145/3442381.3450080. arXiv:2108.05152. NSF PAR 10237411. Acceptance rate: 21%. Cited 55 times. Cited 38 times.

Projects

Funding

- NSF CAREER award

- Boise State University College of Education Civility Grant

Other Work Cited

- Franklin, Ursula M. 2004. The Real World of Technology. Revised Edition. CBC Massey Lectures (1989). Toronto, Ont.; Berkeley, CA: House of Anansi Press.

- Green, B., and S. Viljoen. 2020. “Algorithmic Realism: Expanding the Boundaries of Algorithmic Thought.” In Conference on Fairness, Accountability, and Transparency (FAT* ’20). doi:10.1145/3351095.3372840.

- Friedler, Sorelle A., Carlos Scheidegger, and Suresh Venkatasubramanian. 2021. “The (Im)possibility of Fairness: Different Value Systems Require Different Mechanisms for Fair Decision Making.” Communications of the ACM 64 (4): 136–43. doi:10.1145/3433949.

- Mitchell, Shira, Eric Potash, Solon Barocas, Alexander D’Amour, and Kristian Lum. 2020. “Algorithmic Fairness: Choices, Assumptions, and Definitions.” Annual Review of Statistics and Its Application 8 (November). doi:10.1146/annurev-statistics-042720-125902.

- Chouldechova, Alexandra. 2017. “Fair Prediction with Disparate Impact: A Study of Bias in Recidivism Prediction Instruments.” Big Data 5 (2): 153–63. doi:10.1089/big.2016.0047.

- Binns, Reuben. 2020. “On the Apparent Conflict between Individual and Group Fairness.” In Proceedings of the 2020 Conference on Fairness, Accountability, and Transparency, 514–24. FAT* ’20. doi:10.1145/3351095.3372864.

- Mehrotra, Rishabh, Ashton Anderson, Fernando Diaz, Amit Sharma, Hanna Wallach, and Emine Yilmaz. 2017. “Auditing Search Engines for Differential Satisfaction Across Demographics.” In Proceedings of the 26th International Conference on World Wide Web Companion, 626–33. doi:10.1145/3041021.3054197.

- Harambam, Jaron, Dimitrios Bountouridis, Mykola Makhortykh, and Joris van Hoboken. 2019. “Designing for the Better by Taking Users into Account: A Qualitative Evaluation of User Control Mechanisms in (news) Recommender Systems.” In Proceedings of the 13th ACM Conference on Recommender Systems, 69–77. RecSys ’19. doi:10.1145/3298689.3347014.

- Ferraro, Andres, Xavier Serra, and Christine Bauer. 2021. “What Is Fair? Exploring the Artists’ Perspective on the Fairness of Music Streaming Platforms.” In Proceedings of the 18th IFIP International Conference on Human-Computer Interaction (INTERACT 2021). http://arxiv.org/abs/2106.02415.