Serendipity at the Library

It’s hard, I think, for a recommender system to beat a good library shelf. The wonderful serendipity of browsing the stacks, be it the New Arrivals rack or section Z1002, is a high bar to match; at the very least, library shelves have been very good to me.

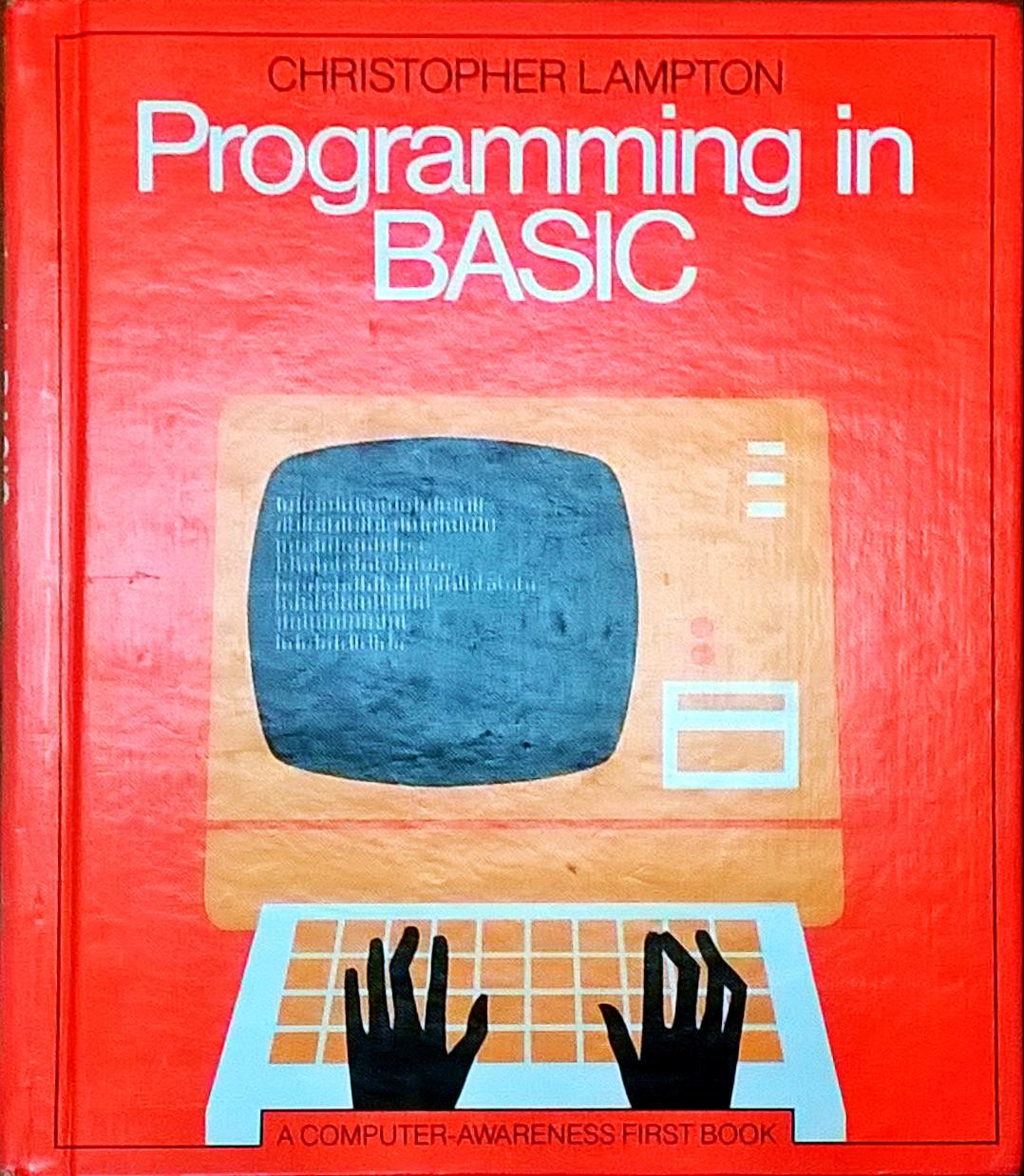

I basically owe my career to finding Christopher Lampton’s Programming in BASIC on the shelves of the children’s nonfiction section of the Pocahontas Public Library¹. My programming skills grew through more books from its shelves, and from those of the libraries in larger neighboring towns.

Also at the Pocahontas library, I discovered The Cuckoo’s Egg, one of my favorite books in high school. A footnote in it presented a recipe for chocolate chocolate chip cookies, which entered my family’s repertoire as ‘Hacker Cookies’.