Keynote for ROEGEN @ RecSys 2024

These are the resources for my keynote talk, “Responsible Recommendation in the Age of Generative AI”, at the 1st Workshop on Risks, Opportunities, and Evaluation of Generative Models in Recommender Systems (ROEGEN) at RecSys 2024.

- 🌅 Abstract

- 🧑🏻‍🏫 Slides

- 🔗 References

- 📝 Edited transcript

Abstract

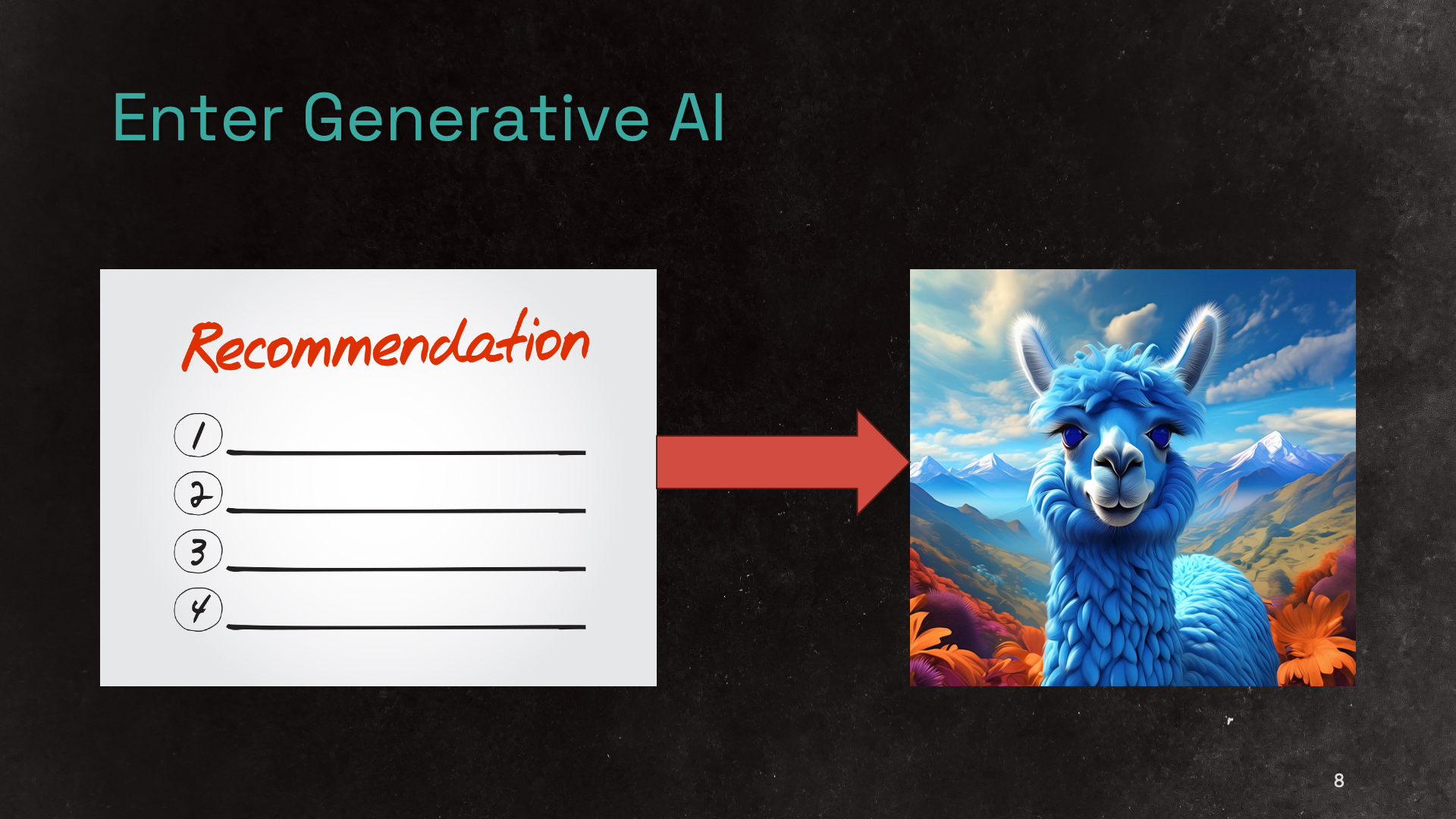

Generative AI is bringing significant upheaval to how we think about and build recommender systems, both in the underlying recommendation techniques and in the design of surrounding applications and user experiences, with potentially profound consequences for the social, ethical, and humanitarian impacts of recommendation. Some concepts developed for responsible recommendation, retrieval, and AI can be applied to generative recommendation, while others are substantially complicated or hindered by the significant changes in the relationships between users, creators, items, and platforms that generative AI brings. In this talk, I will argue that thinking clearly and explicitly about the underlying social, legal, and moral principles that responsible recommendation efforts seek to advance will provide guidance for thinking about how best to apply and revise impact concerns in the face of current and future changes to recommendation paradigms and applications.

Slides

References

, , , and . 2022. Fairness in Information Access Systems. Foundations and Trends® in Information Retrieval 16(1–2) (July 2022), 1–177. DOI 10.1561/1500000079. arXiv:2105.05779 [cs.IR]. NSF PAR 10347630. Impact factor: 8.

, , , and . 2024. Not Just Algorithms: Strategically Addressing Consumer Impacts in Information Retrieval. In Proceedings of the 46th European Conference on Information Retrieval (ECIR ’24, IR for Good track), Mar 24–28, 2024. Lecture Notes in Computer Science 14611:314–335. DOI 10.1007/978-3-031-56066-8_25. NSF PAR 10497110. Acceptance rate: 35.9%.

, , and . 2024. Distributionally-Informed Recommender System Evaluation. Transactions on Recommender Systems 2(1) (March 2024; online Aug 4, 2023), 6:1–27. DOI 10.1145/3613455. arXiv:2309.05892 [cs.IR]. NSF PAR 10461937.

, , , , , and . 2024. The Impossibility of Fair LLMs. In HEAL: Human-centered Evaluation and Auditing of Language Models, a non-archival workshop at CHI 2024, May 12, 2024. arXiv:2406.03198v1 [cs.CL]. Citations reported under ACL25.

William Hill, Larry Stead, Mark Rosenstein, and George Furnas. 1995. Recommending and Evaluating Choices in a Virtual Community of Use. In Proc. CHI ’95. doi:10.1145/223904.223929.

Su Lin Blodgett and Brendan O’Connor. 2017. Racial Disparity in Natural Language Processing: A Case Study of Social Media African-American English. arXiv:1707.00061 [cs.CY]. Presented at FATML 2017.

Nicholas J. Belkin and Stephen E. Robertson. 1976. Some ethical and political implications of theoretical research in information science. In Proceedings of the ASIS annual meeting, 1976. https://www.researchgate.net/publication/255563562

Andrew D Selbst, Danah Boyd, Sorelle A Friedler, Suresh Venkatasubramanian, and Janet Vertesi. 2019. Fairness and abstraction in sociotechnical systems. In Proc. FAT* ’19. doi:10.1145/3287560.3287598.

Cynthia Dwork and Christina Ilvento. 2019. Fairness under composition. In Proc. ITCS 2019. doi:10.4230/LIPICS.ITCS.2019.33.